By Julian Gaberle, 04/06/2021

Last month the Biden administration signed an Executive Order intended to reduce risks to financial stability and improve disclosure of climate-related risks. A similar mandate was signed in the UK, where environmental and climate goals will now be explicitly considered by the Bank of England as part of monetary policy. Artificial Intelligence (AI) systems have been shown to have the potential to decouple economic growth from rising carbon emissions and environmental degradation, but what is the impact of the AI systems themselves on reaching a net-zero carbon economy?

AI systems have been used in a couple of ways to reduce carbon output. One is resource decoupling (reducing the resources used per unit of economic output), for example by predicting crop yields or controlling the heating and cooling of the built environment. The other is impact decoupling, which separates economic output from environmental harm through smarter planning systems. For example, AI systems have helped reduce emissions by forecasting energy usage, thus improving the scheduling of renewable energy sources.

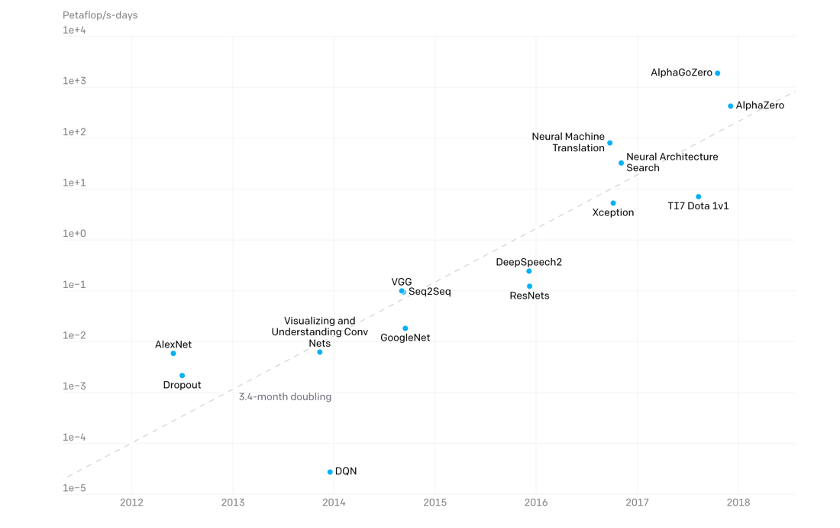

Figure 1: Demonstrating the increase in AI Model complexity over time. (Source: https://openai.com/)

Despite these advances, AI has also garnered significant notoriety for the resource demands of landmark “state of the art” models such as GPT-3 (an AI model trained to understand language). The compute resources consumed to train a single model have been growing exponentially over the past decade (see Fig. 1), with a single training session of GPT-3 having an energy consumption equivalent to the yearly consumption of 126 Danish homes. Research and development of such models over multiple architectures and hyperparameters multiplies these costs by orders of magnitude; however, the true figure is hard to estimate due to a lack of disclosure. Furthermore, such huge models require specialised data warehouses and vast arrays of compute chips, limiting the accessibility of such technologies to a few tech companies.

At the same time, researchers are investigating more efficient models and are continuously improving existing ones (see Fig. 2). Using existing hardware, improvements can be made to data handling, parallel processing and code efficiency. From a hardware perspective, intense research is being done on custom processing chips (e.g., Cerebras’ wafer chips or Google’s TPUs) which are optimised for specific AI applications and thus reduce the resources required to develop and deploy a model.

Figure 2: Model efficiency over time. (Source: https://openai.com/)

Several factors influence the environmental impact of AI systems: the location of the compute resources used for development; the local energy supply; the size of the dataset; and the hardware used to train the models. Just 10 years ago, 79% of computing was done in smaller computer centres and company servers, but a dramatic shift to the cloud has since taken place: a paper in Science estimated that by 2018, 89% of data centre computing was done in large data centres. These large data centres use tailored chips, high-density storage, so-called virtual-machine software, ultrafast networking and customised airflow systems. Furthermore, they can be built at sites better suited to large compute clusters, such as near lakes or in cooler regions, for cheaper, more efficient cooling.
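To make the interplay of these factors concrete, here is a minimal back-of-envelope sketch in Python. It assumes a simple model in which energy is chip count × average power per chip × training hours × the data centre's power usage effectiveness (PUE), and emissions are that energy multiplied by the carbon intensity of the local grid. The class, function names and every number below are hypothetical and chosen purely for illustration; they are not measurements of any real system. Dataset size enters only indirectly, through the number of training hours.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrainingRun:
    """Hypothetical description of one training run (illustrative fields only)."""
    num_chips: int                # number of accelerators used
    avg_power_per_chip_kw: float  # average power draw per chip, in kW
    hours: float                  # wall-clock training time (grows with dataset size)
    pue: float                    # power usage effectiveness of the facility (>= 1.0)
    grid_kg_co2e_per_kwh: float   # carbon intensity of the local energy supply


def energy_kwh(run: TrainingRun) -> float:
    """Total facility energy for the run, including cooling overhead via PUE."""
    return run.num_chips * run.avg_power_per_chip_kw * run.hours * run.pue


def emissions_kg_co2e(run: TrainingRun) -> float:
    """Estimated emissions: facility energy times grid carbon intensity."""
    return energy_kwh(run) * run.grid_kg_co2e_per_kwh


# Assumed example values: the same workload in two different settings.
on_prem = TrainingRun(
    num_chips=64,
    avg_power_per_chip_kw=0.3,
    hours=100.0,
    pue=1.6,                   # assumed: less efficient cooling
    grid_kg_co2e_per_kwh=0.5,  # assumed: carbon-heavy grid
)
# Same hardware and duration, but a more efficient site on a cleaner grid.
cloud = replace(on_prem, pue=1.1, grid_kg_co2e_per_kwh=0.1)

if __name__ == "__main__":
    for label, run in (("on-prem", on_prem), ("cloud", cloud)):
        print(f"{label}: {energy_kwh(run):.0f} kWh, "
              f"{emissions_kg_co2e(run):.0f} kg CO2e")
```

Under these assumed inputs, moving the identical workload to a more efficient site on a cleaner grid lowers the estimate substantially, while adding chips or training hours raises it in direct proportion.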

The intended carbon reduction (side-)effects of the migration from small, on-premise data centres (‘on-prem’) to larger, more efficient, cloud-based solutions have so far had a relatively small net effect: having these resources available has allowed more, and more complex, research to be done, increasing demand. If the current trend towards ever larger AI models continues, the resource consumption of AI systems will outstrip any gains in efficiency.

How does Arabesque AI seek to solve these problems? As a business, one of our core values is Sustainability. This extends to our models as well. We have migrated from a previously on-prem system to our cloud partner, Google Cloud Platform, in order to reap efficiency benefits. We are working hard to increase the efficiency of our models; within our Research and Engineering teams, this is a key objective for 2021. Our models are exceptionally complex and will remain so, likely becoming more complex still. We feel there is more work we can do on the efficiency of the compute processes we use, which will realise both environmental and obvious economic benefits. We encourage other machine learning and AI-reliant businesses to focus on these goals as well.